Note: This is a multipart re-write of a previous series that, when completed, is intended to replace it.

In Part 1 we documented the differences between mathematical and database relations (see table in Part 1). We attributed the fallacy that the RDM can express only one type of relationship -- between relations using FKs -- to the industry being unaware of the adaptation of math relations for database management. We intimated that some of the additional features of database relations express relationships other than between relations.

In Part 2 we identified the intra-group c-relationships (and the corresponding within-relation l-relationships) in our approach to conceptual modeling:

- Properties-entities relationships

- general dependencies

- Properties relationships

- Entities relationships

- entity uniqueness

- functional dependencies (FD)

- entity supertype-subtypes relationships

and used a simple conceptual model (CM) of six entity groups to illustrate them:

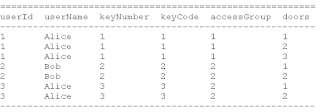

Customers (cID, cname, FICO, discount)

Products (pID, pname, price)

Salesmen (sID, sname, sales, salary, commission)

Orders (oID, pID, cID, sID, date, amount)

Order Items (oID, iID, pID, quantity)

Database design is the use of a data model (DM), here the RDM, to formalize conceptual models (CM), including their c-relationships, as logical models (LM) for database representation. The DM must therefore be able to convert the business rules (BR) that express those relationships in specialized natural language at the conceptual level into formal constraints, expressed in a data sublanguage based on first-order predicate logic (FOPL), at the logical level.
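To make the BR-to-constraint conversion concrete, here is a minimal sketch for one c-relationship from the list above, entity uniqueness. It assumes a hypothetical business rule, "each customer is uniquely identified by its cID", which is not stated in this series but uses the attribute names of the Customers group. Python is not a relational data sublanguage; it is used only to spell out the quantified form of the constraint, and the names `Customer` and `satisfies_uniqueness` as well as the sample values are illustrative assumptions.

```python
from typing import NamedTuple


class Customer(NamedTuple):
    """One tuple of a Customers relation (attribute names taken from the CM)."""
    cID: int
    cname: str
    FICO: int
    discount: float


def satisfies_uniqueness(customers: set) -> bool:
    """Hypothetical BR: each customer is uniquely identified by its cID.

    FOPL form: for all c1, c2 in Customers, c1.cID = c2.cID implies c1 = c2.
    """
    return all(
        c1 == c2
        for c1 in customers
        for c2 in customers
        if c1.cID == c2.cID
    )


# Illustrative data only: a relation is modeled as a set of tuples.
valid = {Customer(1, "Ann", 720, 0.05), Customer(2, "Bob", 680, 0.0)}

# Adding a second, different tuple with cID = 1 violates the constraint.
invalid = valid | {Customer(1, "Ann", 700, 0.10)}

print(satisfies_uniqueness(valid))
print(satisfies_uniqueness(invalid))
```

The natural-language rule lives at the conceptual level, while the quantified predicate over the set of tuples stands in for the formal constraint at the logical level. In a true RDM implementation, such a constraint would be declared in the data sublanguage and enforced by the DBMS rather than checked by application code.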

Our intention is to demonstrate that the RDM can express all these c-relationships, but we face a difficulty.